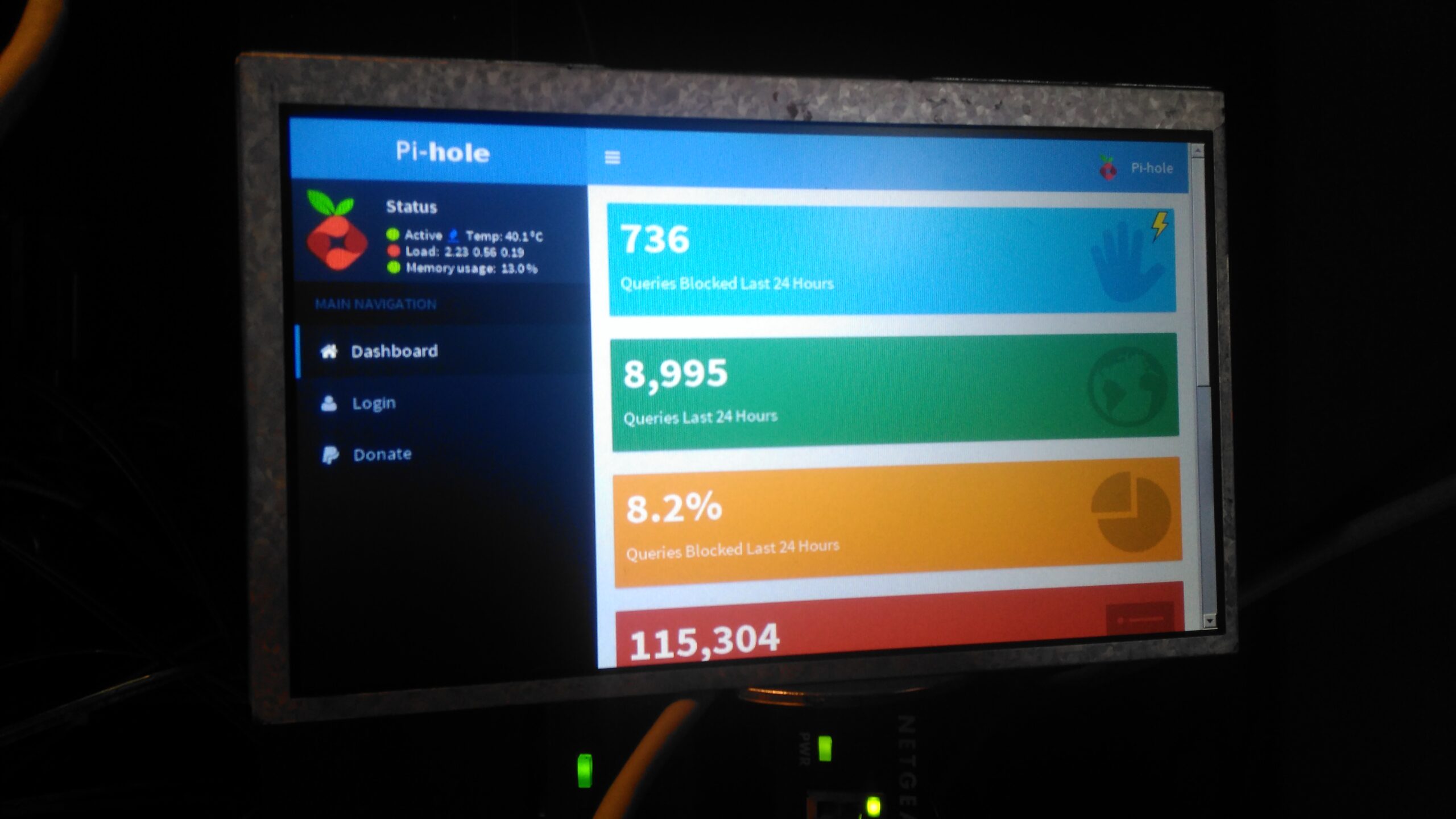

On my home network I have a system running PiHole – a DNS server that blocks all unwanted traffic, such as ads. Since I have an official Pi LCD with a broken touch panel, I decided to use the bare LCD as a status display for PiHole.

This requires some extra packages installing onto the base system after PiHole is installed & configured, and the interface automatically starts on bootup. I used the latest Raspbian Jessie Minimal image for this system, and ran everything over a SSH connection.

First thing, get the required packages installed onto the Pi:

sudo apt-get update sudo apt-get install -y midori matchbox unclutter x11-xserver-utils xinit xserver-xorg

Once these are installed, it’s time to configure the startup script for Midori to display the status page. Create StartMidori.sh in /home/pi and fill with the following:

#!/bin/sh export DISPLAY=:0 xset -dpms xset s off xset s noblank unclutter & matchbox-window-manager & midori -e Fullscreen -a http://127.0.0.1/admin/

This script disables all power management on the system to keep the LCD on, starts unclutter to hide the mouse pointer and finally starts the Matchbox Window Manager to run Midori, which itself is set to fullscreen mode, and the URL of the admin panel is provided.

The next step is to test, give the script executable permissions, and run the script:

chmod +x /home/pi/StartMidori.sh sudo xinit /home/pi/StartMidori.sh

Once this is run, the LCD should come to life after a short delay with the PiHole stats screen. Close the test & return to the terminal by hitting CTRL+C.

Now the Pi can be configured to autorun this script on boot, the first thing to do here is to enable autologin on the console. This can be done with raspi-config, select Option 3 (Boot Options), then Option B1 (Desktop/CLI), then Option B2 (Console Autologin). When prompted to reboot, select No, as we’ll be finishing off the config before we reboot the system.

The next file to edit is /etc/rc.local, add the command to start the status browser up:

#!/bin/sh -e # # rc.local # # This script is executed at the end of each multiuser runlevel. # Make sure that the script will "exit 0" on success or any other # value on error. # # In order to enable or disable this script just change the execution # bits. # # By default this script does nothing. # Print the IP address _IP=$(hostname -I) || true if [ "$_IP" ]; then printf "My IP address is %s\n" "$_IP" fi sudo xinit /home/pi/StartMidori.sh & exit 0

Here I’ve added in the command just above “exit 0”. This will start the browser as the last thing on bootup. The Pi can now be rebooted, and the status display should start on boot!